Intro

It's true, the 3D software market is ruled by Autodesk. People complaining that Maya's HumanIK is a half-hearted, hacky implementation of what MotionBuilder does so well are right. People complaining that Maya doesn't get better modeling tools because of 3DSMax, people complaining that 3DSMax's CA tools are kinda lame compared to what you get in Maya, and even those complaining that nothing in Maya or Max holds a candle to what Softimage's ICE technology can do - they all have a point too. Sadly for the evolution of the industry, there's no longer any 3D software war out there, and whoever thinks otherwise is making a fool of himself. It's basically all Autodesk property now, and they can do whatever they want with it - including making sure these packages stay as segmented as possible, so as to maximize sales of all of them to each client. Does that make any commercial sense to you? That considered, there is only a handful of full-featured contenders out there against the Autodesk "one company to rule'em all" approach - Cinema4D, Houdini and Lightwave 3D being the most notable ones. Among them Lightwave is, by far, the most mature platform, with no less than two decades of technology development to back it up.

I'm personally as much of an "ancient Lightwave user" as anyone can be, having been a beta tester of the software (0.9beta or so) back in the joyful Amiga 500 days. I still have fond memories of the old lightwave-list; I think we had just 10-20 posts per day back then, and the developers (read: the two coders) had the opportunity to reply to each one of us. Good ol' times.

Although it seems like yesterday to me, twenty years have passed since then, and in that time Lightwave took many forms and shapes. It went from "the Video Toaster 3D program" to an obscure PC 3D package right after leaving the Amiga platform, to a "niche" application used by top studios, responsible for the amazing effects of many great TV and movie productions. As evolved as the platform eventually became, the fact is that Lightwave's 9.6 release (early 2009) didn't offer as many enhancements as the community, both amateur and professional, expected. This split the community noticeably, since most users started using other 3D packages, either in parallel with Lightwave or replacing it altogether. The fact that the importer/exporter for the Autodesk-standard FBX file format wasn't as compatible as it should have been isolated Lightwave even more from the other 3D tools, a critical mistake in an Autodesk-owned 3D world. It was simply less of a hassle to move the whole pipeline to Maya, Softimage or 3DSMax.

By the way, I know this is not exactly short, yet I call it a mini-review because fully reviewing an application as vast as Lightwave would take anyone serious about it at least a couple of months, and the resulting review would be 5x bigger than this. Instead I'll mostly focus on the main improvements and on what has changed in the software that can actually make artists' lives easier. During this review I'll also make some comparisons between Lightwave and Maya, not only because the latter is the other full-fledged 3D application that I'm most intimate with, but also because Maya is the app of choice for major 3D studios, making it a kind of benchmark.

Character Animation Improvements

Character Animation has long been considered the Achilles' heel of Lightwave 3D. Version 9.5 included multiple enhancements in this specific area, but poor documentation and the lack of more advanced tutorials on high-quality rigging in Lightwave - like the ones you can easily find for Maya in the likes of Digital Tutors and Gnomonology videos - ended up making wannabe Lightwave character animators "knowledge orphans". It literally took man-years and a number of talented and resourceful Lightwave artists to fully decipher things like the inner differences between joints and bones, or how to integrate IK and the arcane IKBooster interfaces seamlessly enough for production purposes. Thanks to the efforts of these brilliant lightwavers, nowadays there's little to nothing that can be done in Maya or Softimage that can't be done more easily or faster in Lightwave. IK/FK switching, for instance, is a default animatable setting in Lightwave's IK, while Maya riggers waste precious time building the same setting into their rigs. Even so, there's still a lack of "official" resources for most Lightwave CA matters, and training material is relatively scarce. Nevertheless, the Lightwave community forum is probably the warmest and most welcoming 3D community in the scene. It's very easy to find intelligent and extensive discussions about pretty much any topic, and it's very common to get help from the biggest experts in Lightwave. Ah, the beauty and warmth of un-bloated communities.

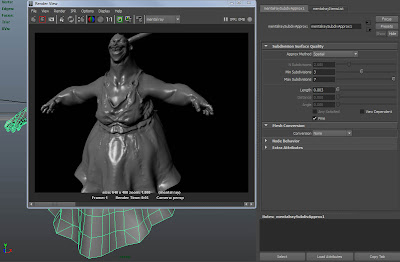

Back to the CA matter, Lightwave's approach to skinning is remarkably different from that of other programs, and probably its biggest highlight. You can interactively rig anything instead of having to fiddle with weightmaps for hours before getting any kind of decent deformation. For instance, while testing a rig, I deleted a handful of unmapped joints right in the middle of the animation process. It all simply worked as expected - or unexpectedly, if you consider the "norm" of other 3D CA tools except for Project:Messiah. Pose deformation (like a bulging muscle) can be easily achieved both procedurally and interactively using the built-in SoftFX component, which is also exceptionally competent at advanced effects like soft bodies and hair physics.

This all sounds great, but to be honest it's nothing new; it's just an intro to this final paragraph in case you're not aware of how things work in the Lightwave world. The point is, the biggest drawbacks in Lightwave for Character Animation up to 9.6 were: 1) the slow deformation speed, which made the animation process slower and more tedious than it should be and required workarounds like proxy meshes, and 2) the half-baked FBX import/export plugin, which couldn't really handle some advanced settings. I'm glad to say that this is now all in the past. Not only is the deformation speed much, much faster, on par with Maya 2011, but the FBX plugin has been vastly enhanced, providing seamless integration with MotionBuilder (aka Mobu) if you require its HumanIK rigging or advanced mocap editing features. It's worth noting that Lightwave does have some nice mocap editing features in its IKBooster component; for most applications that might be enough and free you from the steep Mobu purchase cost. As for raw animation deformation performance, MotionBuilder is still the king of the world, but it's worth mentioning that Mobu has no real-time subdivision capability, nor a magnificent VPR that shows you interactive x-ray bones overlaid on top of a fully rendered scene.

The Renderer

For those inexperienced with Lightwave 3D, if I had to describe its rendering in short I'd say something like "the only native renderer in a full 3D package that's worth using". Compared to the native renderers of the other major players (Maya, Cinema4D, 3DSMax and XSI), it's ridiculously superior. Sure, some will rush to say that Mental Ray is now native to Maya, for instance - I beg to differ. Some advanced plugins require major tuning to work properly with Mental Ray, and even when they do, the result is largely different from that of the native renderer. When I say "major tuning" I mean "generous chunks of time wasted tweaking shader node networks and playing trial-and-error with slow and zero-feedback non-native renderers".

I still remember the days when Lightwave 3D sold one license for each fPrime license sold, and not the other way around. fPrime was the first of its breed, though not exactly a VPR since it has no actual viewport overlay. You usually kept its window open for instant render feedback while manipulating scene items in the main application window. This worked really well, but it wasn't completely compatible with everything Lightwave had to offer, like Hypervoxels (for advanced volumetric particle effects) and even the most advanced shaders provided in the latest v9 releases. Slowly Worley Labs caught up with most of it, but you still often ended up wasting time on actual Lightwave renders in the end, which was a bummer.

And here's Lightwave 10's VPR. Trust my words, nothing I've ever seen compares to it. Coming from a huge struggle with a complex Maya scene, just trying to tweak textures and get the intended results, this is seriously a night-and-day difference. The performance is outrageous, humbling even the mighty Modo interactive renderer, not to mention that Modo (even with the just-released 501 update) has no character animation tools. VPR is able to outperform even the regular renderer, which got huge speed buffs of its own - and, most importantly, whatever you get from VPR is exactly what you get out of the Lightwave render buffers. Oh, the joy!

If you're considering alternative real-time viewport renderers for Maya, be warned that you won't have a high success rate with 3rd party plugins. Of course you might get lucky; this is just a heads-up so you're not caught with your pants down when the client asks for some specific effect and you think that shiny new real-time render plugin will save the day and make you look good. It might do all you need in one scene or another, but hardly with the interactivity you'd want under a tight deadline. Alas, that means a great chance of missing that deadline and ending up looking bad instead. My point is that having a 100% native VPR engine is not just amazing, it sets a new mark for consistency and reliability. You won't get this, for instance, out of the spanking new Arion or Octane render engines, the first being quite limited in terms of what it can render so far, and the second using some tricks to speed up rendering, tricks which not only lower your render quality but also generally lead to preview results that differ from what the final renders deliver.

One gripe I used to have with the otherwise outstanding LW renderer was the crispness of its antialiasing. It either didn't antialias well, or it produced a way too soft result. For photoreal rendering a soft result is generally desirable, but for toony rendering you really want some extra crispness to reach a more "Pixar-like" look. I was extremely pleased with the software's new AA modes, including Lanczos and Mitchell Sharp filtering: I got very nice MentalRay-AA-like results with marginal render time increases.
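For anyone wondering where that extra crispness comes from: both Lanczos and Mitchell kernels dip below zero away from their center, which gives edges a slight overshoot that a purely positive filter can't produce. Below is a minimal Python sketch of the textbook 1D kernels. It is not Lightwave's implementation. The function names, the Lanczos radius `a=3` and the Mitchell values `b = c = 1/3` are assumptions for illustration, and which parameters Lightwave's "Mitchell Sharp" mode actually uses isn't something this review covers.

```python
import math


def lanczos(x, a=3):
    """Textbook Lanczos kernel: sinc(x) * sinc(x / a) for |x| < a, else 0.

    The radius a=3 is an assumed, commonly used value.
    """
    if x == 0:
        return 1.0
    if abs(x) >= a:
        return 0.0
    px = math.pi * x
    return a * math.sin(px) * math.sin(px / a) / (px * px)


def mitchell(x, b=1 / 3, c=1 / 3):
    """Textbook Mitchell-Netravali cubic; b and c are assumed defaults."""
    x = abs(x)
    if x < 1:
        return ((12 - 9 * b - 6 * c) * x**3
                + (-18 + 12 * b + 6 * c) * x**2
                + (6 - 2 * b)) / 6
    if x < 2:
        return ((-b - 6 * c) * x**3
                + (6 * b + 30 * c) * x**2
                + (-12 * b - 48 * c) * x
                + (8 * b + 24 * c)) / 6
    return 0.0


if __name__ == "__main__":
    # Print a few samples; note the negative weights at x = 1.5.
    for x in (0.0, 0.5, 1.0, 1.5, 2.0):
        print(f"x={x:.1f}  lanczos={lanczos(x):+.4f}  mitchell={mitchell(x):+.4f}")
```

The negative lobes around x = 1.5 are the part that sharpens: neighboring samples are subtracted a little instead of only being averaged in.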

One of the most glaring limitations of this low-cost powerhouse renderer - one which has made many jump ship for more boring pastures - is the lack of render passes. Render passes, the ability to output separate images with isolated diffuse, specular, shadow, etc. from a single render, might seem unnecessary to the hardcore old hats (again, like me), but once you've enjoyed the pleasure of precisely tweaking every minor shade of your render in post-production (After Effects, Shake, etc.), it's really hard to go back. Unfortunately, this important feature hasn't made it into Lightwave 10, at least not natively. That said, there are some limited buffer exporting features in its latest incarnations: individual render buffers, which can be vastly extended by some third party tools and libraries - all well worth researching.
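To make the appeal of passes concrete, here is a minimal Python sketch of how separate buffers might be recombined and tweaked in post. Everything in it is an assumption for illustration: the `recombine` function, the single-channel pixel lists and the simple "diffuse times shadow plus specular" formula are not Lightwave's buffer layout or any compositor's actual node, and real pipelines vary in which passes they export and how they combine them.

```python
def recombine(diffuse, specular, shadow, diffuse_gain=1.0, specular_gain=1.0):
    """Rebuild a 'beauty' value per pixel from three hypothetical passes.

    Each pass is a list of floats (one channel, linear light). The assumed
    formula is: diffuse * diffuse_gain * shadow + specular * specular_gain.
    """
    if not (len(diffuse) == len(specular) == len(shadow)):
        raise ValueError("all passes must have the same number of pixels")
    return [
        d * diffuse_gain * s + sp * specular_gain
        for d, sp, s in zip(diffuse, specular, shadow)
    ]


if __name__ == "__main__":
    # Two made-up pixels: one lit with a highlight, one half in shadow.
    diffuse = [0.5, 0.5]
    specular = [0.25, 0.0]
    shadow = [1.0, 0.5]

    print(recombine(diffuse, specular, shadow))
    # Boost only the highlights, without touching diffuse or re-rendering.
    print(recombine(diffuse, specular, shadow, specular_gain=2.0))
```

The second call is the whole point: the highlight gets stronger while the shadowed pixel stays exactly as it was, and no frame had to be rendered again.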

Technology and Extensibility

It's important to mention that Lightwave 10 still uses the ancient original LW code structure, and inherits some of its limitations. The touted "CORE" technology announced last year still hasn't culminated in a product mature enough to fully replace the current one, so Newtek wisely decided to migrate some of the key CORE improvements to Lightwave 9.6 and release it as Lightwave 10. It really takes time to re-invent the huge wheel which Lightwave has become, something that Newtek vastly underestimated, judging by its initial release schedules. Yet this re-invention is much needed to allow things like an integrated editor - instead of two separate yet inter-communicating applications - and finally a modeling environment on par with the polygon-crunching leaders, namely Modo and 3DSMax.

Nevertheless, what seems like a drawback is sometimes a huge advantage. The consistency and backwards compatibility of the Lightwave engine mean you've got a ton of free and cheap plugins and LScripts (Lightwave's lower-powered equivalent of "MEL") developed over all those years, most of them still working and hugely helpful to this day, be it for modeling, animating or special effects.

Conclusion

Newtek really expected to have a decent Lightwave CORE release before the year's end. This wasn't possible, but at least they deserve praise for avoiding Autodesk's low standard of releasing, and charging users for, pre-beta software in desperate need of hotfixes that only come out months later. Maya 2011 anyone? It's hard to believe that even the much needed "component editor" in Maya wasn't fully operational when that program was released, and even after a couple of hotfixes and one service pack it's still the most unstable and crashy major 3D application in the business. Trust me, even the finicky Modo 401 seems stable compared to it. On the other hand, Lightwave 10 has been stable for months now, and its version "10" wasn't released almost one year ahead of 2010. Ahem.

The fact is, in such a saturated and monotonous business environment, for Lightwave to recover some of its share - and, hopefully, gain new ground to ensure further development - it can only rely on its new and unique features, on top of what it already offered. Lightwave is a truly integrated solution with CA tools, hair, rigid and soft dynamics, without any 3rd party plugins required, coupled with excellent quality renders - no MentalRay or V-Ray required, so no scratching your head about which material or shader works and which doesn't. VPR is an outstanding addition which completely revamps the way and the frequency with which we artists shape the final image - which, at the end of the day, is all that matters. The new built-in 'Linear Workflow' lighting system is yet another powerful weapon in the Lightwave arsenal for this exact purpose, and artists will surely take huge advantage of it.
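For readers new to the idea behind a linear workflow, here is a minimal Python sketch. It uses the standard piecewise sRGB transfer curve, which is an assumption on my part: this review doesn't describe how Lightwave's own Linear Workflow settings are implemented, and the function names are made up for illustration. The idea is simply that light should be added in linear space and only encoded for the display at the very end.

```python
def srgb_to_linear(c):
    """Decode a display-encoded sRGB value in [0, 1] to linear light."""
    if c <= 0.04045:
        return c / 12.92
    return ((c + 0.055) / 1.055) ** 2.4


def linear_to_srgb(c):
    """Encode a linear-light value in [0, 1] back to sRGB for display."""
    if c <= 0.0031308:
        return c * 12.92
    return 1.055 * c ** (1 / 2.4) - 0.055


def add_lights_linear(a, b):
    """Add two sRGB-encoded intensities the linear way.

    Decode both, sum in linear light, clamp to 1.0, then re-encode.
    """
    total = min(srgb_to_linear(a) + srgb_to_linear(b), 1.0)
    return linear_to_srgb(total)


if __name__ == "__main__":
    a = b = 0.5  # two mid-grey light contributions, as seen on screen
    print("naive sum of encoded values:", min(a + b, 1.0))
    print("sum done in linear light:   ", round(add_lights_linear(a, b), 4))
```

Adding the two encoded mid-greys directly blows out to pure white, while the linear-space sum lands at roughly 0.69. That gap is why lighting tends to look washed out or burnt when the math is done on gamma-encoded values.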

To sum things up, this is the most powerful, most complete and most innovative Lightwave release in many years, fully honoring the tradition of the days when Allen Hastings and Stuart Ferguson were still steering the ship, pushing the 3D envelope and being the first to release high-end, industry-defining technology like radiosity, particle voxels and subdivision surfaces for personal computers.

It's great to have you back in your new shining armor, Lightwave. Such a warm feeling!